Calibration

MPL Calibration Toolbox

We provide an additional, easy-to-use toolbox to execute and reproduce the calibration tasks with detailed instructions. Related configuration files, data sequences, and calibration results can be found below.

Intrinsics

All camera intrinsics, including the focal lengths, the optical center, and the distortion parameters, are calibrated using the official ROS Camera Calibration toolbox by gently moving the cameras in front of a 9 x 6 checkerboard displayed on a computer screen. Using a virtual checkerboard allows us to display it in either static or blinking mode. The latter is particularly useful for event camera calibration, as it produces accumulated event images in which the checkerboard appears sharp. Images that contain too much blur have been manually removed.

- Left Event Camera Intrinsics: data, results

- Right Event Camera Intrinsics: data, results

- Left Regular Camera Intrinsics: data, results

- Right Regular Camera Intrinsics: data, results
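To illustrate what an "accumulated event image" of the blinking checkerboard looks like in code, here is a minimal sketch that counts events per pixel and rescales the counts to 8 bits. The event layout (separate `xs` and `ys` pixel-coordinate arrays) and the function name `accumulate_events` are assumptions made for this example; they are not the format or tooling used by the toolbox.

```python
import numpy as np

def accumulate_events(xs, ys, width, height):
    """Count events per pixel and scale the counts to an 8-bit image."""
    counts = np.zeros((height, width), dtype=np.float64)
    # np.add.at performs an unbuffered add, so repeated pixel indices
    # are each counted (plain fancy-index += would count them once).
    np.add.at(counts, (ys, xs), 1.0)
    peak = counts.max()
    if peak > 0:
        counts *= 255.0 / peak
    return counts.astype(np.uint8)

# Hypothetical toy input: three events, two of them on the same pixel.
xs = np.array([1, 1, 3])
ys = np.array([0, 0, 2])
img = accumulate_events(xs, ys, width=4, height=3)
```

An image produced this way can be passed to a standard checkerboard detector in the same way as a regular camera frame.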

Note that for the RGB-D sensor we use the factory calibration results (the intrinsics of both the color and the depth camera as well as the extrinsics between them).

- RGB-D Color Camera Intrinsics: factory results

- RGB-D Depth Camera Intrinsics: factory results

- RGB-D Camera Extrinsics: factory results

- [NEW 2022-05] RGB-D Camera Extrinsics: config, data, results

The IMU intrinsics (i.e., the statistical properties of the accelerometer and gyroscope signals, including bias random walk and noise densities) are calibrated using the Allan Variance ROS toolbox on a 5-hour-long IMU sequence recorded with the sensor lying flat on the ground and left undisturbed.
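For readers unfamiliar with Allan variance analysis, the following is a minimal NumPy sketch of the overlapping Allan deviation of a rate signal. It is not the implementation of the Allan Variance ROS toolbox; the sampling rate, the noise level, and the function name `allan_deviation` are hypothetical values chosen for the example.

```python
import numpy as np

def allan_deviation(rate, fs, m_values):
    """Overlapping Allan deviation of a rate signal sampled at fs Hz.

    m_values are cluster sizes in samples; the averaging time is m / fs.
    """
    dt = 1.0 / fs
    # Integrate the rate signal once (e.g. gyro rate -> angle).
    theta = np.concatenate(([0.0], np.cumsum(rate) * dt))
    n = theta.size
    taus, adevs = [], []
    for m in m_values:
        if 2 * m >= n:
            break  # not enough samples for this averaging time
        # Second difference of the integrated signal at lag m.
        d = theta[2 * m:] - 2.0 * theta[m:-m] + theta[:-2 * m]
        avar = np.sum(d ** 2) / (2.0 * (m * dt) ** 2 * d.size)
        taus.append(m * dt)
        adevs.append(np.sqrt(avar))
    return np.array(taus), np.array(adevs)

# Hypothetical stationary recording: pure white noise at 100 Hz.
fs = 100.0
sigma = 0.01
rng = np.random.default_rng(0)
rate = sigma * rng.standard_normal(200_000)
taus, adevs = allan_deviation(rate, fs, [1, 10, 100, 1000])
```

For pure white noise, the Allan deviation falls with slope -1/2 on a log-log plot, and its value at an averaging time of 1 s approximates the noise density (here roughly `sigma / sqrt(fs)`). A bias random walk would show up as a rising +1/2 slope at longer averaging times, which is why a long stationary recording is needed.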

Joint Camera Extrinsic Calibration

In order to determine the extrinsics of the multi-camera system, we point the sensor setup towards the screen and record both static and blinking checkerboard patterns of known size. For each observation, the sensor suite rests steadily on a tripod so that the relative position between screen and sensors stays fixed, and the board is kept within the field of view of all cameras. Note that here we use the color camera of the RGB-D sensor to jointly calibrate its extrinsics. The extrinsics of the depth camera are then derived from the known internal parameters of the depth camera. The rolling shutter effect can be safely ignored because there is no motion between the cameras and the pattern. The extrinsics are calculated by detecting corner points on the checkerboard pattern and applying PnP to the resulting 2D-3D correspondences. The result is refined by minimizing the reprojection error with Ceres Solver. Finally, we validate the estimated extrinsic parameters by analyzing the quality of depth map reprojections and by comparing the result against measurements from the CAD model.

- Joint Camera Extrinsics (CAD model): CAD readings

- Joint Camera Extrinsics (small-scale): data, results

- Joint Camera Extrinsics (large-scale): data, results

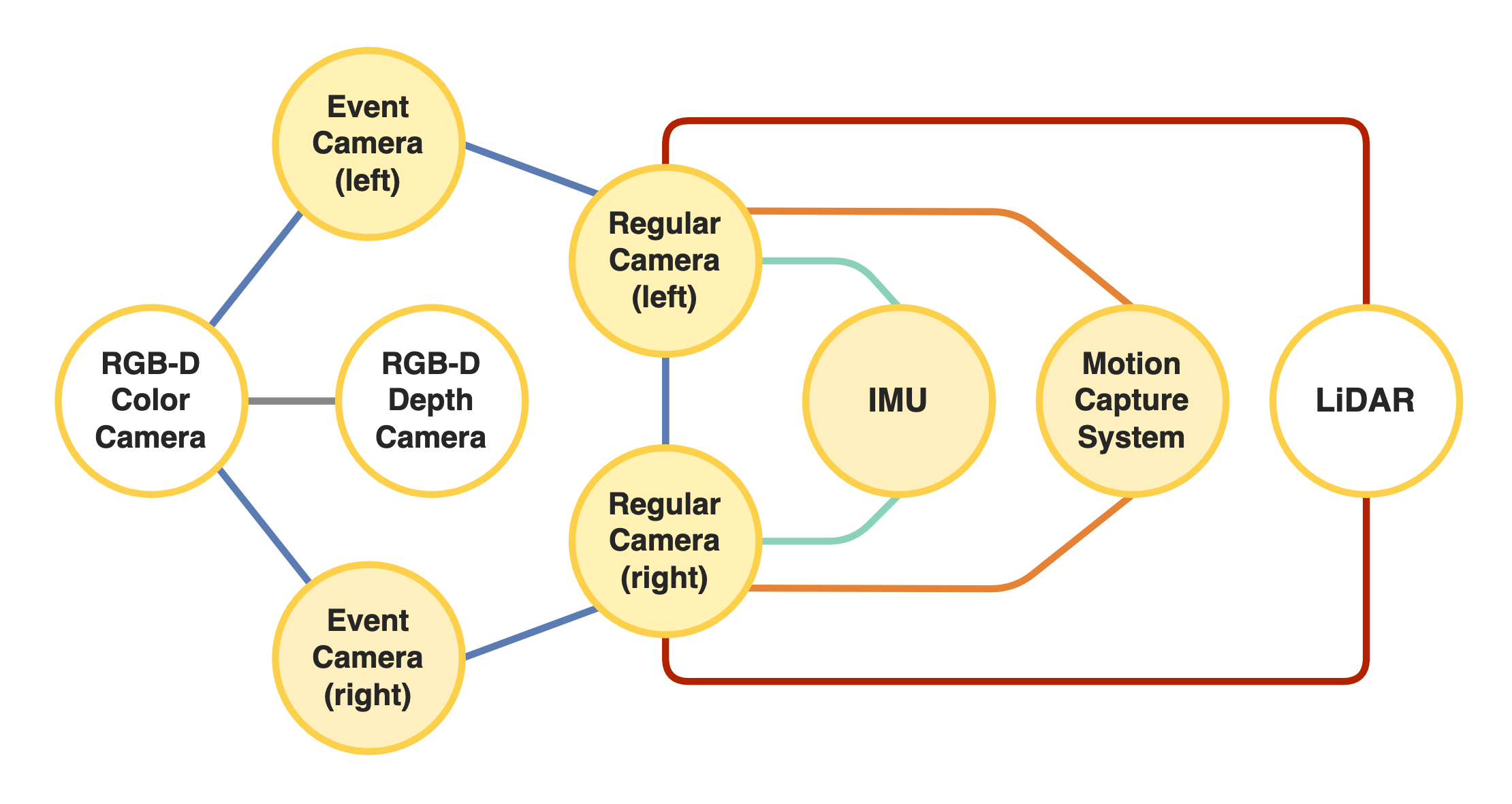
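The reprojection error that the refinement step minimizes can be sketched as follows. This is only an evaluation of the cost for a given pose, assuming an undistorted pinhole model; it is neither a PnP solver nor the Ceres-based refinement. The intrinsic matrix, the square size, the board pose, and the reading of "9 x 6" as the corner grid are hypothetical choices for the example.

```python
import numpy as np

def board_points(cols, rows, square):
    """3D corner coordinates on the board plane (z = 0), row by row."""
    gx, gy = np.meshgrid(np.arange(cols), np.arange(rows))
    pts = np.stack([gx.ravel(), gy.ravel(), np.zeros(cols * rows)], axis=1)
    return pts * square

def project(points_board, R, t, K):
    """Pinhole projection of board points into the image (no distortion)."""
    p_cam = points_board @ R.T + t   # board frame -> camera frame
    uv = p_cam @ K.T                 # apply the intrinsics
    return uv[:, :2] / uv[:, 2:3]    # perspective division

def rms_reprojection_error(points_board, pixels, R, t, K):
    """Root-mean-square pixel distance between projections and detections."""
    residual = project(points_board, R, t, K) - pixels
    return np.sqrt(np.mean(np.sum(residual ** 2, axis=1)))

# Hypothetical intrinsics and board pose, for illustration only.
K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
R_true = np.eye(3)
t_true = np.array([-0.1, -0.05, 1.0])

pts = board_points(9, 6, 0.03)
pixels = project(pts, R_true, t_true, K)  # synthetic "detected" corners

err_true = rms_reprojection_error(pts, pixels, R_true, t_true, K)
err_shifted = rms_reprojection_error(
    pts, pixels, R_true, t_true + np.array([0.01, 0.0, 0.0]), K)
```

With these synthetic values, the true pose gives an error of essentially zero, while shifting the translation by 1 cm at 1 m depth moves every projected corner by 6 pixels. A nonlinear refinement adjusts the pose parameters to drive this quantity down.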

Camera-IMU Extrinsic Calibration

Extrinsic transformation parameters between the IMU and the regular stereo camera are identified using the Kalibr toolbox. The visual-inertial system is directed towards a static 6 x 6 April-grid board, and the board is constantly kept within the field of view of both regular cameras. All six axes of the IMU are properly excited, and the calibration is conducted under good illumination conditions to further reduce the unwanted side effects of motion blur. Since the intrinsics of the regular stereo camera and the IMU as well as the extrinsics between the regular cameras are already known, we restrict the estimation to the extrinsics between the IMU and the regular stereo camera.

- Camera-IMU Extrinsics (CAD model): CAD readings

- Camera-IMU Extrinsics (small-scale): config, data1, results1 (data2, data3)

- Camera-IMU Extrinsics (large-scale): config, data1, results1 (data2, data3)

Camera-MoCap Hand-eye Calibration

The MoCap system outputs position measurements of the geometric centers of all markers, expressed in a MoCap-specific reference frame. In order to compare recovered trajectories against ground truth, we therefore need to identify a Euclidean transformation between the MoCap frame of reference and any other sensor frame. A static 7 x 6 checkerboard is kept within the field of view of both gently moving cameras while MoCap pose measurements are recorded simultaneously. Relative poses from both the MoCap system and the cameras are then used to solve the hand-eye calibration problem using the official OpenCV calibrateHandEye API.
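The hand-eye problem described above is commonly written as A X = X B, where A and B are relative motions of the marker body (from MoCap) and of the camera (from the checkerboard), and X is the unknown transformation between them. The NumPy sketch below only builds synthetic poses and checks this constraint; it does not call calibrateHandEye. All frame names, pose values, and helper functions are hypothetical.

```python
import numpy as np

def rotation(axis, angle):
    """Rotation matrix from axis-angle via Rodrigues' formula."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    S = np.array([[0.0, -a[2], a[1]],
                  [a[2], 0.0, -a[0]],
                  [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(angle) * S + (1.0 - np.cos(angle)) * (S @ S)

def pose(R, t):
    """4x4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def hand_eye_residual(T_mocap_body, T_cam_board, X):
    """Largest Frobenius norm of A X - X B over consecutive pose pairs."""
    worst = 0.0
    for i in range(len(T_mocap_body) - 1):
        A = np.linalg.inv(T_mocap_body[i + 1]) @ T_mocap_body[i]
        B = T_cam_board[i + 1] @ np.linalg.inv(T_cam_board[i])
        worst = max(worst, np.linalg.norm(A @ X - X @ B))
    return worst

# Hypothetical ground truth: camera pose in the marker-body frame (X)
# and the static board pose in the MoCap frame.
X_true = pose(rotation([0, 0, 1], 0.3), [0.05, 0.0, 0.1])
T_mocap_board = pose(rotation([1, 0, 0], 0.2), [1.0, 0.5, 0.0])

# Hypothetical marker-body poses as reported by the MoCap system.
T_mocap_body = [
    pose(rotation([1, 0, 0], 0.0), [0.0, 0.0, 0.0]),
    pose(rotation([1, 0, 0], 0.4), [0.1, 0.0, 0.0]),
    pose(rotation([0, 1, 0], 0.5), [0.0, 0.2, 0.1]),
]
# Board pose seen by the camera, consistent with the static board.
T_cam_board = [np.linalg.inv(X_true) @ np.linalg.inv(T) @ T_mocap_board
               for T in T_mocap_body]

res_true = hand_eye_residual(T_mocap_body, T_cam_board, X_true)
res_identity = hand_eye_residual(T_mocap_body, T_cam_board, np.eye(4))
```

The residual vanishes (up to rounding) for the true X and is clearly nonzero for a wrong guess such as the identity. Rotations about at least two different axes are needed in the motion for X to be uniquely determined, which is why the toy poses rotate about both x and y.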

Camera-LiDAR Extrinsic Calibration

Our extrinsic calibration between the LiDAR and the cameras is performed indirectly, via a high-quality colored point cloud captured by a FARO scanner. The point cloud is captured in an unfurnished room with simple geometric structure, in which we place only a checkerboard. To perform the extrinsic calibration, we then record LiDAR scans and corresponding camera images while moving the sensor setup through this room, keeping the cameras constantly directed at the checkerboard. Next, we estimate the transformation between the FARO and the LiDAR coordinate frames by point cloud registration. Because the FARO scan is very dense and colored, we can hand-pick the 3D points that correspond to the checkerboard corners in the real world. By detecting those same points in the camera images, we can again run the PnP method to obtain the FARO-to-camera transformations. Finally, the extrinsic parameters between the LiDAR and the cameras are obtained by concatenating the two transformations above.

- Camera-LiDAR Extrinsics (CAD model): CAD readings

- [UPDATE 2022-08] Camera-LiDAR Extrinsics (large-scale): FARO scanning, raw data, processed data, results
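The final concatenation step of the Camera-LiDAR calibration can be sketched with 4x4 homogeneous transforms. The matrix values and the names `T_faro_lidar` (maps LiDAR points into the FARO frame, from registration) and `T_cam_faro` (maps FARO points into the camera frame, from PnP) are hypothetical and only serve to make the frame bookkeeping explicit.

```python
import numpy as np

def make_transform(R, t):
    """4x4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def invert_transform(T):
    """Closed-form inverse of a rigid transform."""
    R, t = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ t)

# Hypothetical values: 90 degree rotations about z and x.
Rz = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
Rx = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]])
T_faro_lidar = make_transform(Rz, [1.0, 0.0, 0.0])  # LiDAR -> FARO
T_cam_faro = make_transform(Rx, [0.0, 0.0, 2.0])    # FARO -> camera

# Concatenate: a LiDAR point goes to the FARO frame first, then the camera.
T_cam_lidar = T_cam_faro @ T_faro_lidar

p_lidar = np.array([1.0, 2.0, 3.0, 1.0])  # homogeneous LiDAR point
p_cam = T_cam_lidar @ p_lidar
```

Note the order of multiplication: the transform applied first stands rightmost. The inverse, `invert_transform(T_cam_lidar)`, maps camera-frame points back into the LiDAR frame.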